I know what you’re thinking: yet another post about Docker container yada yada yada. There’s quite a lot out there indeed, and here’s mine. I’m putting it out because this has actually really helped me.

Put to the test

Lately I’ve been working on a test suite for an application that uses Elasticsearch and Redis as data backends. The tests are BDD scenarios that run code paths interacting with the data stores. For the most realistic simulation, I’m using real instances of these data stores so the code can interact with genuine APIs in realistic patterns. This way I can validate everything behaves as expected.

Something that has made this considerably easier is the use of Docker containers to run the local versions of Elasticsearch and Redis.

Portable disposability

When running the suite, I need to be able to easily configure, start, stop and tear down the environment, so I can develop the app rapidly and get everything running again from a clean slate any time I make changes. It’s quite easy to install a local Redis on a Mac with Homebrew, and Elasticsearch now has a tool for managing local instances. But these installations aren’t conveniently isolated. With containers, by contrast, I can encapsulate each service and its data in a single throwaway container, making it trivial to create or dispose of them as needed during testing.

I don’t work alone either. I have teammates who want to get set up and running tests with minimal hassle when contributing to the app. I could go off and write up a massive README about how to set up services locally, but there’s no point when I can instead script a concise set of commands that will take care of everything for them. Oh, and it doesn’t matter if you’re on Mac or Linux: you just get Docker set up on your machine and you’re good.

Running the suite

I’m going to go through step-by-step how this is set up. The application in question is a Node.js app, so we’ll be using npm scripts to co-ordinate most of this.

Kicking everything off is as simple as writing npm test in your console.

The command stops any previously running test containers and brings up the required containers if they’re not already running.

Let’s have a look at what’s being run by npm. These are the relevant lines in the package.json:

The trick here is the pretest script, which npm runs automatically before the test script whenever you run npm test. Pretest performs the necessary preparation for our main test script to run.
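To make the pretest mechanism concrete, here is a rough sketch of what such a scripts section might look like. It is not the app’s actual package.json: the service names elasticsearch, redis and node, and the exact docker-compose invocations, are assumptions chosen for illustration.

```json
{
  "scripts": {
    "pretest": "docker-compose up -d elasticsearch redis",
    "test": "docker-compose run --rm node",
    "kill-services": "docker-compose kill && docker-compose rm -f"
  }
}
```

With scripts along these lines, `npm test` would first run `pretest` to bring up the data stores in the background, then run the suite in a throwaway container. Because `kill-services` is a custom script name, it has to be invoked as `npm run kill-services`.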

As you can see, we’re using the docker-compose utility to control the containers. The containers are defined in a docker-compose.yml file, where we can conveniently place all of the Docker-specific configuration. Let’s take a look.

We have container definitions for Elasticsearch, Redis, and the Node.js container in which our test suite runs. The other containers are linked to it, allowing them to communicate over Docker’s network. As we’ll see below, our code needs to be configured to use the Docker container hosts, which are named exactly the same as their containers. The last bit of trickery is to use the correct ports, which are unique to each container and can change, so we pull them out of a rather verbosely named env variable that Docker sets.

Our node container uses the barebones Alpine Linux image, weighing in at a feathery 30-odd MB. This is ideal because the smaller the image, the quicker it is to download and the faster containers start.

The application code is loaded into the container by the volumes directive. The container has access to all the folders specified when it runs. For our application code, we mount the current directory to the container’s /app path.

In a Node.js workflow, we usually npm link modules. Symlinks that point outside a mounted directory don’t resolve inside the container, so any modules we have linked in node_modules won’t be visible to it. To work around this, we specify the linked modules as additional volumes and mount them at their paths under /app/node_modules. This has the same effect as npm link has locally: the container loads our local files. All this leaves us free to test our code changes in the container environment quickly while we pursue the zany new developments in our app.
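Pulling the pieces described so far together, a compose file for this kind of setup might look roughly like the sketch below. It is an illustration under stated assumptions, not the project’s real file: it uses the legacy (version 1) compose format with links, the image names are guesses, ../my-linked-module is a hypothetical npm-linked module, and test:suite is a hypothetical npm script that launches the BDD runner.

```yaml
# Hypothetical docker-compose.yml in the legacy v1 format, where `links`
# make the other containers reachable by name from the node container.
elasticsearch:
  image: elasticsearch        # assumption: official image

redis:
  image: redis                # assumption: official image

node:
  image: node:alpine          # assumption: any small Alpine-based Node.js image
  working_dir: /app
  volumes:
    - .:/app                  # application code
    # hypothetical npm-linked module, mounted where node_modules expects it
    - ../my-linked-module:/app/node_modules/my-linked-module
  links:
    - elasticsearch
    - redis
  # hypothetical script name; the Redis port pass-through is left out here
  command: npm run test:suite
```

Each extra entry under volumes stands in for one npm-linked module, so the list grows with the number of modules being developed alongside the app.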

The final part is defining the command to run our suite. In our case we have to make our code aware of the ephemeral Redis port, which Docker defines inside the container environment at run time. All we have to do is pass it through as an env variable our app expects, which is done as part of the test command. Call me out when I say “variable our app expects”, because this is easily hand-waved. When I was setting this all up I needed to think about how the app would connect to the correct places. Quite a few changes were needed to make the hosts and ports overridable, so don’t shy away if you’re in that boat.
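As a minimal sketch of what “overridable hosts and ports” can mean in code, the helper below resolves a service’s connection settings from the environment. It assumes legacy Docker links, which inject variables such as REDIS_PORT_6379_TCP_PORT into the linked container. The names resolveService, APP_REDIS_HOST and APP_REDIS_PORT are hypothetical and not taken from the app.

```javascript
// Sketch: resolve a data store's host and port from environment variables.
// Assumptions: legacy Docker links inject <NAME>_PORT_<port>_TCP_PORT;
// APP_<NAME>_HOST and APP_<NAME>_PORT are hypothetical override names.
// (Plain <NAME>_PORT is avoided because links set it to a tcp:// URL.)
function resolveService(env, name, defaultPort) {
  const upper = name.toUpperCase();
  const linkPort = env[`${upper}_PORT_${defaultPort}_TCP_PORT`];
  const host = env[`APP_${upper}_HOST`] || 'localhost';
  // Precedence: explicit override, then the Docker link variable, then the default.
  const port = Number(env[`APP_${upper}_PORT`] || linkPort || defaultPort);
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`Invalid port for ${name}: ${port}`);
  }
  return { host, port };
}

const redis = resolveService(process.env, 'redis', 6379);
console.log(`redis at ${redis.host}:${redis.port}`);
```

Outside a container nothing is set, so the helper falls back to localhost and the default port. Inside the test container, setting the host override to the container name (redis) would point the same code at the linked service.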

Test time

With all that in place, Docker can set up, link, and run our containers together from one npm test command. The test suite runs and all the data is encased by the containers. If we want to inspect the state, we can connect to the data stores, which are still running, and probe them with tools like curl for querying Elasticsearch’s API, or redis-cli for talking to Redis.

When we’re done, or simply want to tear down the whole thing, npm run kill-services does the deed. Then we’re free to set everything up from scratch once again.

Stub out the tricky bits

The beauty of all this is that it’s all self-contained on the local machine. But Docker can’t take all the credit for that. It’s partly due to the test suite, which employs some techniques to minimise reliance on external dependencies.

I mention this because our app also needs an external MySQL database, for which we don’t yet have very good tooling to set up and fill with mock data in an automated fashion. And actually, for the most part this would be overkill when stubbing is totally sufficient. So stubbing is exactly what we do, with the excellent Sinon module. I won’t go into the specifics here, but it’s very useful for overriding functions that fetch information from external resources like MySQL with fake versions returning pre-defined, controlled results.
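To show the idea without pulling in any dependencies, here is a small hand-rolled stub rather than Sinon itself. Everything in it is hypothetical: userStore stands in for a module that would query MySQL, and greeting is the code under test. With Sinon, the equivalent would be along the lines of `sinon.stub(userStore, 'fetchUser').resolves({ id: 7, name: 'Ada' })`, followed by `restore()` on the stub afterwards.

```javascript
// Hypothetical data-access module that would normally query MySQL.
const userStore = {
  async fetchUser(id) {
    throw new Error(`would hit the real MySQL database for user ${id}`);
  },
};

// Hypothetical code under test, which depends on the data-access module.
async function greeting(id) {
  const user = await userStore.fetchUser(id);
  return `Hello, ${user.name}!`;
}

// Minimal hand-rolled stub: swap the method for a fake, record the calls,
// and hand back a way to restore the original.
function stubMethod(object, method, fake) {
  const original = object[method];
  const calls = [];
  object[method] = (...args) => {
    calls.push(args);
    return fake(...args);
  };
  return { calls, restore: () => { object[method] = original; } };
}

async function demo() {
  const stub = stubMethod(userStore, 'fetchUser', async () => ({ id: 7, name: 'Ada' }));
  try {
    // greeting() now receives the canned user instead of touching MySQL.
    return { message: await greeting(7), calls: stub.calls };
  } finally {
    stub.restore(); // always put the real function back
  }
}

demo().then((result) => console.log(result.message));
```

Restoring in a finally block keeps one scenario’s fake from leaking into the next, which matters when many scenarios share the same modules.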

Therefore with all external services enclosed in containers and remaining ones stubbed, our test suite is entirely local and can run something that resembles an entire stack in production on my local machine. Online or offline.

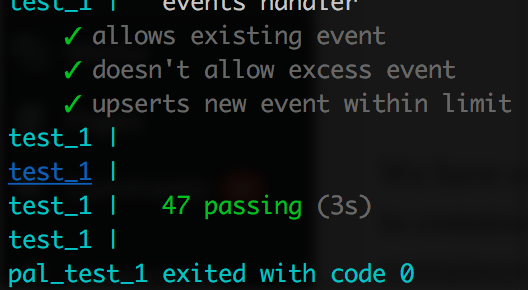

As a result, I run tests much more often. I add more tests, because it’s no longer painful to run and manage them. And I’d expect this to make me more productive. In practice that’s difficult to measure, because in software there’s always a TODO list a mile long, but I certainly feel better for having this, if only from seeing those little green ticks sparkle in the test report.